Research

Vision

My Fusion Intelligence Laboratory aims to use machine intelligence and multi-source information fusion to benefit humanity.

Research areas

My research aims to use machine intelligence and multi-source information fusion to benefit humanity. I develop multimodal and embodied AI systems that can understand complex environments, assist people in daily life, and improve decision-making in healthcare, with a strong emphasis on trustworthiness, robustness, and privacy protection. Specifically, my research areas include:

- Multimodal Learning and Image Fusion. Visible–infrared fusion, multi-focus and multi-exposure fusion, multimodal medical imaging, and cross-modal representation learning.

- Human-Centered Computer Vision. Pedestrian tracking, human pose estimation, and behavior prediction with a focus on safety, robustness, and privacy protection.

- Embodied Intelligence. Robot perception and embodied AI, supported by the robots and range of sensors in my lab.

- Trustworthy and Ethical AI. Generative-AI-based pedestrian privacy protection, adversarial robustness, and AI alignment in embodied systems.

- AI for Healthcare. Multimodal medical data fusion for diagnostic assistance.

Selected funded projects

- The Royal Society International Exchanges grant (with NSFC), PI

- The Royal Society Research Grant, PI

- Exeter-Fudan Fellowship, PI

- Exeter-Université Paris-Saclay Seed Fund Grant, PI

- UKRI AIRR Gateway project, PI

- QUEX Joint PhD Scholarship, Primary Supervisor at University of Exeter

- Marie Sklodowska-Curie Individual Fellowship, PI

- Shanghai Science and Technology Committee Research Project, PI

Research topics

1. Multimodal Learning and Image Fusion

Related publications:

- X. Zhang, Y. Demiris. Visible and Infrared Image Fusion using Deep Learning, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 8, pp. 10535-10554, 2023. (ESI Highly Cited Paper, ESI Hot Paper)

- X. Zhang. Deep Learning-based Multi-focus Image Fusion: A Survey and A Comparative Study, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 9, pp. 4819-4838, 2022. [Link] (ESI Highly Cited Paper)

- X. Zhang. Benchmarking and Comparing Multi-exposure Image Fusion Algorithms. Information Fusion, vol. 74, pp. 111-131, 2021. (The first multi-exposure image fusion benchmark) [Benchmark link]

- X. Zhang, P. Ye, H. Leung, K. Gong, G. Xiao. Object Fusion Tracking Based on Visible and Infrared Images: A Comprehensive Review. Information Fusion, vol. 63, pp. 166-187, 2020.

- X. Zhang, P. Ye, G. Xiao. VIFB: A Visible and Infrared Image Fusion Benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2020. (The first image fusion benchmark, used by researchers from more than 10 countries.) [Benchmark link]

- X. Zhang, P. Ye, S. Peng, J. Liu, G. Xiao. DSiamMFT: An RGB-T fusion tracking method via dynamic Siamese networks using multi-layer feature fusion. Signal Processing: Image Communication, vol. 84, 2020.

- X. Zhang, P. Ye, D. Qiao, J. Zhao, S. Peng, G. Xiao. Object Fusion Tracking Based on Visible and Infrared Images Using Fully Convolutional Siamese Networks. In Proceedings of the 22nd International Conference on Information Fusion, 2019.

- X. Zhang. Multi-focus Image Fusion: A Benchmark. arXiv preprint arXiv:2005.01116, 2020. (The first multi-focus image fusion benchmark in the community)

- Z. Zhao, A. Howes, X. Zhang*. MultiTaskVIF: Segmentation-oriented visible and infrared image fusion via multi-task learning. arXiv preprint arXiv:2505.06665. [Link]

- H. Li, X. Liu, W. Kong, X. Zhang*. FusionCounting: Robust visible-infrared image fusion guided by crowd counting via multi-task learning. arXiv preprint arXiv:2508.20817. [Link]

- Q. Li, Z. Zhao, X. Zhang*. Brain tumor segmentation using multimodal MRI. DIFA 2025 Workshop, BMVC 2025.

- Z. Zhao, X. Zhang*. SSVIF: Self-Supervised Segmentation-Oriented Visible and Infrared Image Fusion. arXiv preprint arXiv:2508.20817. [Link]

- G. Xiao, D. P. Bavirisetti, G. Liu, X. Zhang. Image Fusion, Springer Nature Singapore and Shanghai Jiao Tong University Press, 2020. This book was supported by the National Science and Technology Academic Publications Fund of China (2019).

- X. Zhang. Intelligence of Fusion: Deep Learning-based Image Fusion (written in Chinese). 2025. This book is available here.

* Corresponding authors

2. Human-Centered Computer Vision

(1) Pedestrian Trajectory Prediction

(2) Pedestrian Crossing Intention Prediction

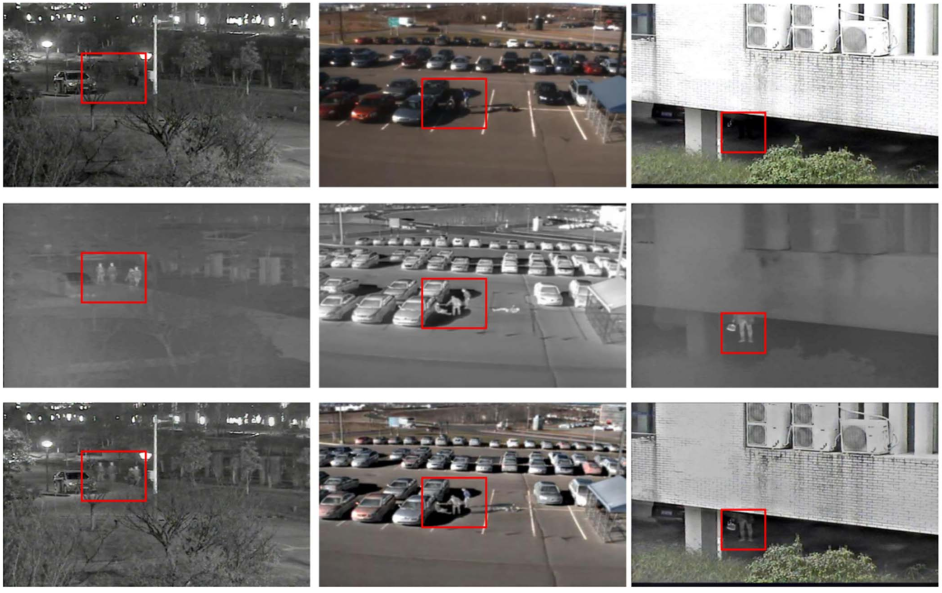

(3) Pedestrian Tracking


(4) Crowd Counting

Related publications:

- X. Zhang*, P. Angeloudis, Y. Demiris. ST CrossingPose: A Spatial-Temporal Graph Convolutional Network for Skeleton-based Pedestrian Crossing Intention Prediction, IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 11, pp. 20773-20782, 2022.

- X. Zhang*, P. Angeloudis, Y. Demiris. Dual-branch Spatio-Temporal Graph Neural Networks for Pedestrian Trajectory Prediction, Pattern Recognition, vol. 142, 2023.

- H. Li, Z. Wang, W. Kong, X. Zhang*. SelectMOT: Improving Data Association in Multiple Object Tracking via Quality-Aware Bounding Box Selection. IEEE Sensors Journal, vol. 25, no. 11, pp. 28607-28617, 2025.

- X. Zhang*, Y. Demiris. Self-Supervised RGB-T Tracking with Cross-Input Consistency. arXiv preprint arXiv:2301.11274 (2023).

- J. Liu, P. Ye, X. Zhang*, G. Xiao. Real-time long-term tracking with reliability assessment and object recovery. IET Image Processing, vol. 15, no. 4, pp. 918-935, 2021.

- J. Liu, G. Xiao, X. Zhang*, P. Ye, X. Xiong, S. Peng. Anti-occlusion object tracking based on correlation filter. Signal, Image and Video Processing, vol. 14, no. 4, pp. 753-761, 2020.

- J. Zhao, G. Xiao*, X. Zhang*, D. P. Bavirisetti. An improved long-term correlation tracking method with occlusion handling. Chinese Optics Letters, vol. 17, no. 3, pp. 031001-1 to 031001-6, 2019.

- H. Li, T. Liao, W. Kong, X. Zhang*. MCIVA: A Multi-View Pedestrian Detection Framework with Central Inverse Nearest Neighbor Map and View Adaptive Module. Information Fusion, 2026.

- H. Li, X. Liu, W. Kong, X. Zhang*. FusionCounting: Robust visible-infrared image fusion guided by crowd counting via multi-task learning. arXiv preprint arXiv:2508.20817. [Link]

3. Embodied Intelligence, especially robot perception

More info to come.

4. Trustworthy and Ethical AI

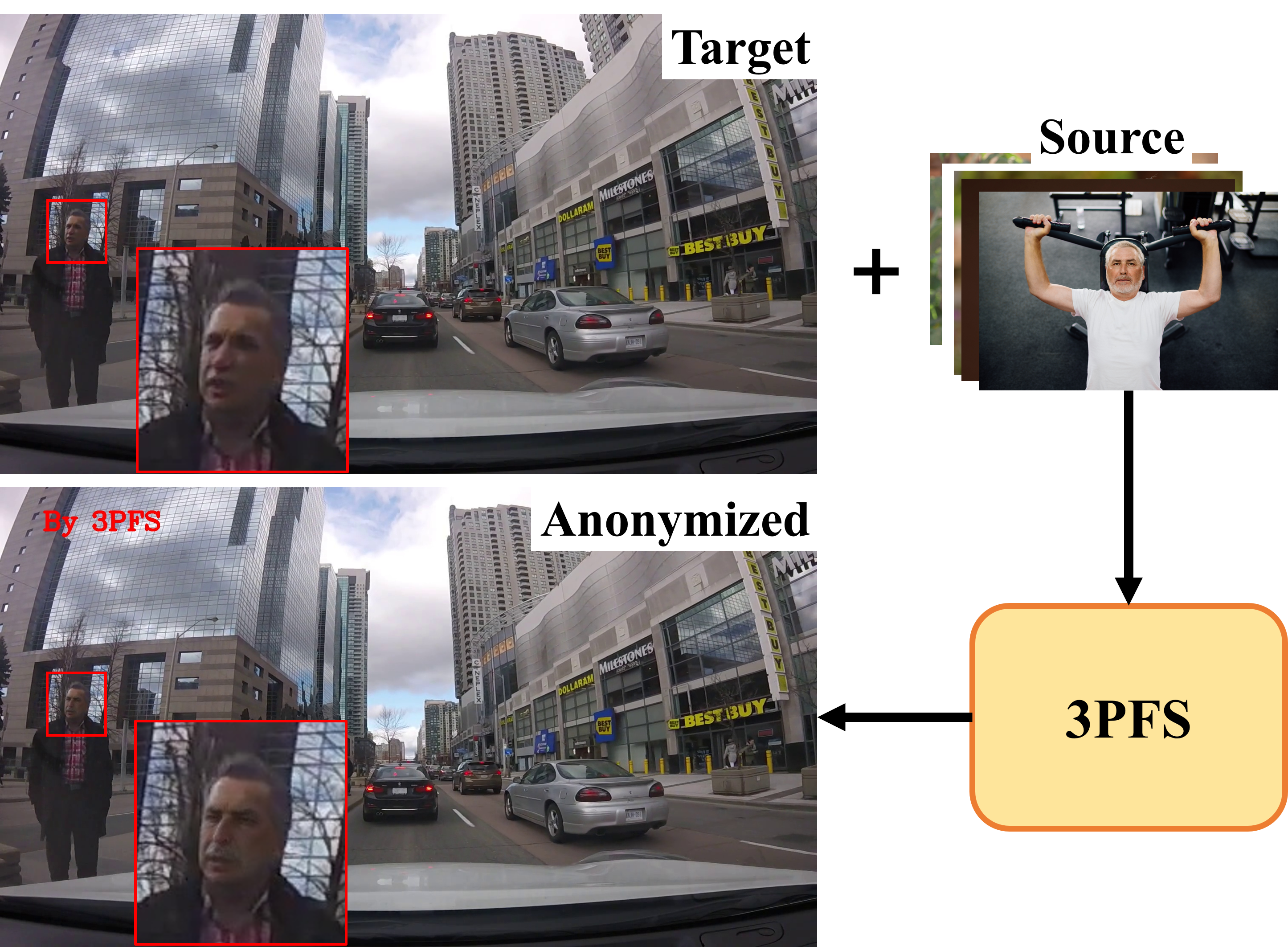

(1) Pedestrian Privacy Protection

Many of the videos used to train AI models are captured by cameras mounted on robots and vehicles, and they often record pedestrians. We aim to protect pedestrian privacy in such videos while preserving the utility of the anonymized footage.

Related publications:

- Z. Zhao, X. Zhang*, Y. Demiris. 3PFS: Protecting pedestrian privacy through face swapping, IEEE Transactions on Intelligent Transportation Systems, vol. 25, no. 11, pp. 16845-16854, 2024.

- X. Zhang*, Z. Zhao. More effort is needed to protect pedestrian privacy in the era of AI. NeurIPS, 2025. Oral paper.

(2) AI Security

Related publications:

- H. Li, X. Liu, W. Kong, X. Zhang*. FusionCounting: Robust visible-infrared image fusion guided by crowd counting via multi-task learning. arXiv preprint arXiv:2508.20817. [Link]

5. AI for Healthcare

Ongoing collaborative projects are supported by an Exeter-Fudan Fellowship. More information to come.

Related publications:

- Q. Li, Z. Zhao, X. Zhang*. Brain tumor segmentation using multimodal MRI. DIFA 2025 Workshop, BMVC 2025.

6. Others

(1) AI for Social Good

Related publications:

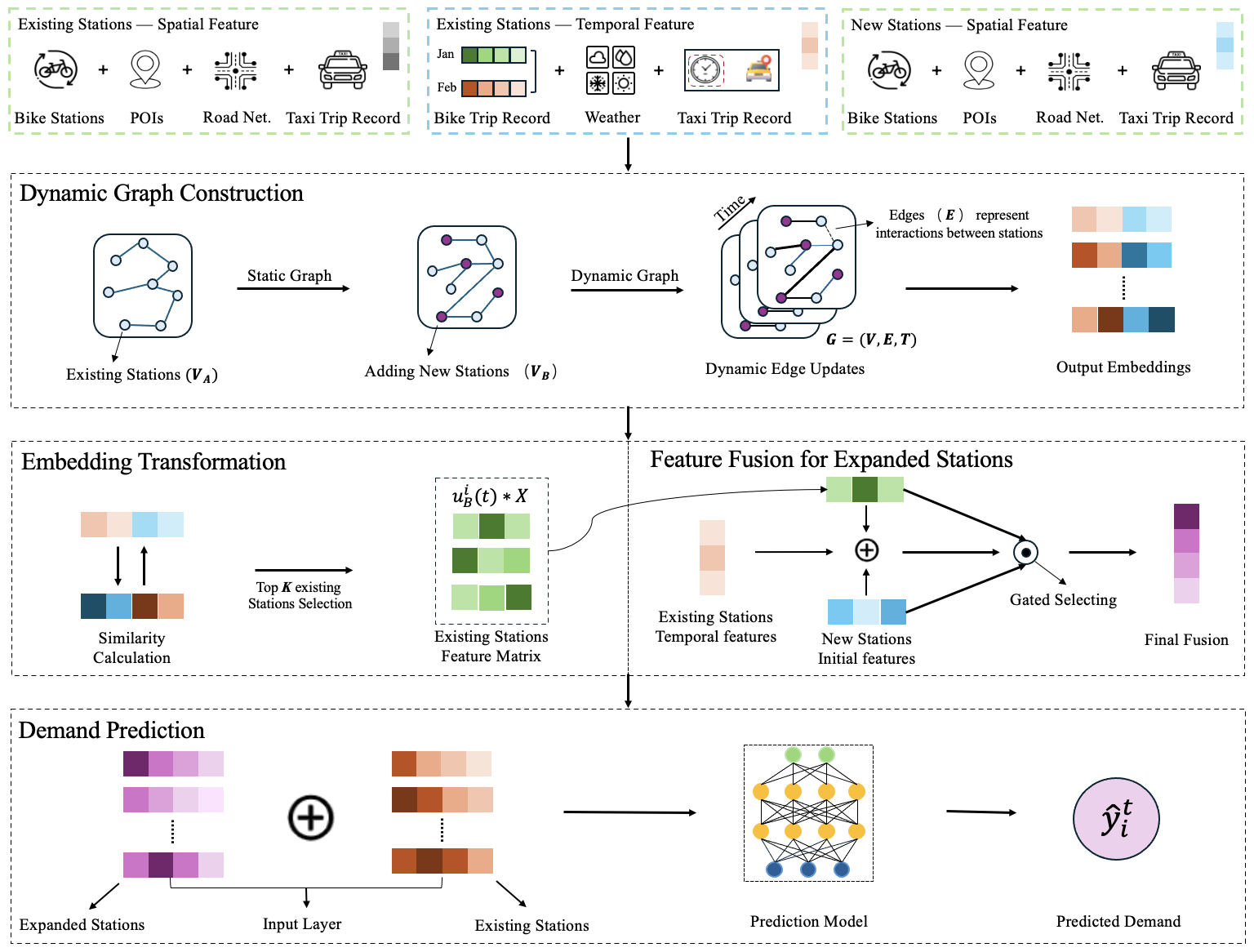

- Y. Zhao, H. Wen, X. Zhang, M. Luo. BGM: Demand Prediction for Expanding Bike-Sharing Systems with Dynamic Graph Modeling, IJCAI2025.

(2) AI for Science

Ongoing collaborative projects. More info to come in the future.